When algorithms failed us.

Bias in ML and AI

The trouble with intuition

As humans, we tend to over-rely on “hunches”: that feeling somewhere in our guts that guides some of our decisions. These gut feelings can be very useful when instant choices are required, but more often than not they lead us down the wrong path, and we’re not always the only ones harmed. In other instances, we might have the time to make balanced and careful considerations by gathering and analyzing evidence before making a decision. However, our brains are not designed to process large amounts of information very well, which leads us, once again, to rely on mental shortcuts that reduce mental effort and simplify the problem so we can act on it.

More formally, what I’m describing above is known as “cognitive bias,” which leads us to create our own “subjective reality,” resulting in perceptual distortions, inaccurate judgments, illogical interpretations, or what is broadly called irrationality[i]. These processes are mainly unconscious[ii] and widely recognized as playing a role in discrimination[iii].

Bias is like fog you’ve been breathing in your whole life

Some cognitive biases are more harmful than others. In particular, implicit bias (our tendency to hold attitudes towards people without our conscious knowledge) can profoundly affect how we interact with others. Research suggests that, because of it, “people can act based on prejudice and stereotypes without intending to do so[iv].” In other words, and appealing to a widely used analogy, implicit bias is like fog you’ve been breathing in your whole life. And if bias is fog, people will have a hard time perceiving the world as it really is[v].

Photo by Sherise VD on Unsplash

Is AI the answer?

Companies welcome algorithms as a refreshing antidote; they’re said to improve decision-making and be fairer in the process[vi] by reducing, or even eliminating, subjective human interpretation of data. Yet many worry that algorithms may bake in and scale human and societal biases, failing to provide services to people equally and transparently[vii]. Here I present examples illustrating how so-called “AI” can fail the people it’s built to serve.

Bias in ML

Examples of how AI failed the people it's built to serve.

The first example comes from an experiment run by researchers at CMU. They found that male browsers were four times more likely than female browsers to see a job-related ad promising high salaries. In other words, the ads served through Google Ads were skewed towards men. But why? Unfortunately, as previously discussed, algorithms are also prone to biases that render their decisions unfair.

The data was collected by creating 10,000 fresh browser instances and randomly assigning them to treatment (namely, “female”) and control (“male”) groups. The browsers visited 100 employment websites (Indeed, Glassdoor, and Monster, among others) and then visited the Times of India, where the ads shown to each browser were recorded.
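Because the browsers are assigned to groups at random, one way to judge whether a gap in ad exposure could be due to chance is a permutation test. The sketch below is only an illustration of that idea, not the researchers' code: the function name `permutation_test` and the toy counts are hypothetical and do not reproduce the study's data.

```python
import random


def permutation_test(treatment, control, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in group means.

    The group labels are shuffled many times; the p-value is the share of
    shuffles whose absolute mean difference is at least the observed one.
    """
    rng = random.Random(seed)
    n_t = len(treatment)
    n_c = len(control)
    observed = abs(sum(treatment) / n_t - sum(control) / n_c)
    pooled = list(treatment) + list(control)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_t]) / n_t - sum(pooled[n_t:]) / n_c)
        if diff >= observed:
            hits += 1
    return hits / n_permutations


# Hypothetical toy data: 1 = the browser saw the high-salary ad, 0 = it did not.
female_browsers = [1] * 5 + [0] * 45
male_browsers = [1] * 20 + [0] * 30

p_value = permutation_test(female_browsers, male_browsers)
print(f"estimated p-value: {p_value:.4f}")
```

A small p-value here only says the gap is unlikely under random labeling; it does not say which part of the ad pipeline caused it.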

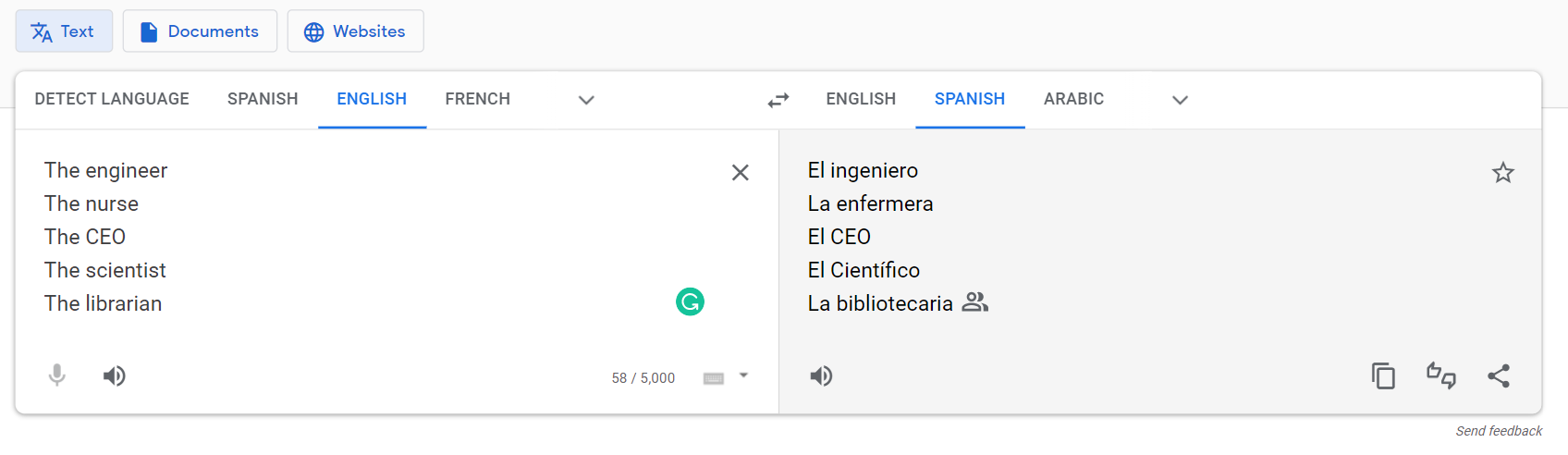

The next example shows the pronouns used by the Google Translate API when translating different job positions from English to non-gender-neutral languages such as Spanish. Take a look at the results above: for occupations like “engineer” and “CEO,” the algorithm chooses a male pronoun in Spanish, whereas for “nurse” and “librarian,” it chooses a female pronoun. This is very problematic for reasons that go beyond the scope of this work. However, I will insist on the following: algorithms should mitigate these biases to avoid nudging their users towards unfair and discriminatory associations (between occupations and sex, in this case).

In the third example, the percentage of women appearing in the top 100 images shown by Google Images strongly correlates with the Bureau of Labor Statistics estimates of women’s participation in each occupation. And what’s interesting (but not in a positive way) is that for occupations in which women are overrepresented (model, flight attendant, among others), Google Images is more likely to show images of women. This is also problematic because it reinforces gender stereotypes that some organizations and individuals are trying so hard to fight[viii] [ix].

Should AI and ML show the world as it is or as it should be?

In the literature, there is some discussion around whether algorithms should show the world as it is (discriminatory and sexist, unfortunately) or should go a step further and apply affirmative action to help historically disadvantaged populations. Going back to the Google Images example, some would say that the algorithm just replicates what it sees in the world and thus does not introduce any bias. Others would argue that this is not enough, and that researchers and practitioners should introduce debiasing techniques to show, for example, at least a 50/50 split between women and men for each occupation.

Some can even have deadly consequences...

The examples so far may seem subtle, with no direct effect on people’s lives. Others can have deadly consequences. In my final example, an algorithm used to manage population health exhibits racial bias: sicker Black patients are assigned the same level of risk as healthier White patients. According to the researchers[x], the bias arises because the algorithm predicts health care costs rather than illness. Because of unequal access to care, less money is spent on Black patients than on equally sick White patients, so predicted cost understates their health needs and they do not receive the care they need. Finally, the researchers argue that “remedying this disparity would increase the percentage of Black patients receiving additional help from 17.7 to 46.5%.”
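To see why predicting cost instead of illness can produce this kind of gap, here is a toy sketch. Everything in it is an assumption made for illustration: the `Patient` class, the group labels “A” and “B”, the spending figures, and the cutoff of six patients are hypothetical and have nothing to do with the real algorithm or its data.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    chronic_conditions: int  # stand-in for true health need
    annual_cost: float       # what a cost-trained model would learn to predict


def make_patients():
    patients = []
    for conditions in range(1, 11):
        # Assumption: both groups are equally sick, but group B generates
        # less spending per condition because of worse access to care.
        patients.append(Patient("A", conditions, conditions * 1000.0))
        patients.append(Patient("B", conditions, conditions * 650.0))
    return patients


def share_of_group_flagged(patients, score, group, top_k):
    """Share of `group` among the top_k patients ranked by `score`."""
    flagged = sorted(patients, key=score, reverse=True)[:top_k]
    return sum(p.group == group for p in flagged) / top_k


patients = make_patients()
by_cost = share_of_group_flagged(patients, lambda p: p.annual_cost, "B", top_k=6)
by_need = share_of_group_flagged(patients, lambda p: p.chronic_conditions, "B", top_k=6)

print(f"group B share when ranking by cost: {by_cost:.2f}")
print(f"group B share when ranking by need: {by_need:.2f}")
```

In this toy setup, ranking by cost leaves group B with a much smaller share of the extra-help slots than ranking by need, even though both groups are equally sick by construction.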

Bias and Fairness are complex notions

There’s no “one size fits all” solution. Researchers have proposed different definitions of bias and fairness in ML, and which one applies depends on the problem’s context and the task at hand. An ML algorithm can be fair through “unawareness” (when protected attributes such as sex are not explicitly used in the training/learning process) or by applying debiasing techniques based on different metrics. It also depends on whether practitioners are looking for individual fairness (“give similar predictions to similar individuals”) or group fairness (“treat different groups equally”). If you want to dig deeper, the following article presents a comprehensive taxonomy of fairness in ML:

Ninareh Mehrabi et al., “A Survey on Bias and Fairness in Machine Learning,” ArXiv:1908.09635 [Cs], January 25, 2022, http://arxiv.org/abs/1908.09635.
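As a rough illustration of what a group fairness metric can look like, the sketch below compares how often a model gives a positive prediction to each group. The function names and the toy predictions are my own assumptions; the two quantities are commonly called statistical parity difference and disparate impact, and they are only two of the many definitions discussed in the literature.

```python
def selection_rate(predictions, groups, group):
    """Share of positive predictions (1s) received by one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)


def statistical_parity_difference(predictions, groups, unprivileged, privileged):
    """Difference in selection rates; 0 means both groups are selected equally."""
    return (selection_rate(predictions, groups, unprivileged)
            - selection_rate(predictions, groups, privileged))


def disparate_impact(predictions, groups, unprivileged, privileged):
    """Ratio of selection rates; 1 means both groups are selected equally."""
    return (selection_rate(predictions, groups, unprivileged)
            / selection_rate(predictions, groups, privileged))


# Hypothetical toy predictions: 1 = shortlisted, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["m"] * 5 + ["f"] * 5

spd = statistical_parity_difference(predictions, groups, "f", "m")
di = disparate_impact(predictions, groups, "f", "m")
print(f"statistical parity difference: {spd:.2f}")
print(f"disparate impact: {di:.2f}")
```

Note that these metrics need the protected attribute to be available at evaluation time, even for a model that is “unaware” of it during training.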

The advantage

The good news is that, unlike human decisions, algorithms can be opened up, examined, and interrogated. The recommendations below come from the McKinsey Global Institute.

Photo by Barney Yau on Unsplash

And what can you do?

Source: McKinsey Global Institute

1. Be aware of contexts in which AI can help correct for bias and those in which there is a high risk of exacerbating bias.

2. Establish processes and practices to test for and mitigate bias in AI systems.

3. Invest more in bias research, make more data available for research, and adopt a multidisciplinary approach.

4. Engage in fact-based conversations about potential biases in human decisions.

Are you an ML practitioner?

There's extensive research on preventing and mitigating bias in machine learning algorithms and AI. Additionally, building on previous research, companies like IBM[3] have developed toolkits in R and Python that help ML practitioners debias their algorithms and check whether the outcomes are fair.
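To give a flavor of what a debiasing step can look like, here is a hand-written sketch of reweighing, a pre-processing idea described by Kamiran and Calders in which each training example gets a weight so that group membership and label look statistically independent. It does not use or reproduce any toolkit's API; the function names and the toy data are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights)
                   if g == group and y == 1)
    return positive / total


# Hypothetical toy training data: 1 = hired, 0 = not hired.
groups = ["m"] * 5 + ["f"] * 5
labels = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for group in ("m", "f"):
    rate = weighted_positive_rate(groups, labels, weights, group)
    print(f"{group}: weighted positive rate = {rate:.2f}")
```

The weights would then be passed to a learner that supports per-example weights; whether that is enough to make the final outcomes fair still has to be checked with metrics suited to the context.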

References

[i] “Cognitive Bias,” in Wikipedia, February 22, 2022, https://en.wikipedia.org/w/index.php?title=Cognitive_bias&oldid=1073455512.

[ii] “Unconscious Bias: What It Is and How to Avoid It in the Workplace,” Ivey Business School, accessed February 27, 2022, https://www.ivey.uwo.ca/academy/blog/2019/09/unconscious-bias-what-it-is-and-how-to-avoid-it-in-the-workplace/.

[iii] “3 Cognitive Biases Perpetuating Racism at Work - and How to Overcome Them,” World Economic Forum, accessed February 27, 2022, https://www.weforum.org/agenda/2020/08/cognitive-bias-unconscious-racism-moral-licensing/.

[iv] Michael Brownstein, “Implicit Bias,” in The Stanford Encyclopedia of Philosophy, ed. Edward N. Zalta, Fall 2019 (Metaphysics Research Lab, Stanford University, 2019), https://plato.stanford.edu/archives/fall2019/entries/implicit-bias/.

[v] Erin Beeghly and Alex Madva, eds., An Introduction to Implicit Bias: Knowledge, Justice, and the Social Mind (United Kingdom: Routledge, 2020).

[vi] Silberg, J. and Manyika, J., “Notes from the AI Frontier: Tackling Bias in AI (and in Humans)” (McKinsey Global Institute, June 2019), https://www.mckinsey.com/~/media/mckinsey/featured%20insights/artificial%20intelligence/tackling%20bias%20in%20artificial%20intelligence%20and%20in%20humans/mgi-tackling-bias-in-ai-june-2019.pdf.

[vii] Ibid.

[viii] “Help Us Fight against Harmful Gender Norms and Stereotypes!,” UNESCO, September 16, 2021, https://en.unesco.org/news/help-us-fight-against-harmful-gender-norms-and-stereotypes.

[ix] “How to Beat Gender Stereotypes: Learn, Speak up and React,” World Economic Forum, accessed March 3, 2022, https://www.weforum.org/agenda/2019/03/beat-gender-stereotypes-learn-speak-up-and-react/.

[x] Ziad Obermeyer et al., “Dissecting Racial Bias in an Algorithm Used to Manage the Health of Populations,” 2019, 8. https://www.science.org/doi/10.1126/science.aax2342