Political Content Moderation on Social Media Needs to Change

The spread of misinformation and hate speech on social media platforms has become a defining political, cultural, and economic issue of our time.

Image by pch.vector from Freepik

Localized Solutions over Universal Approaches

Global companies are searching for universal solutions, yet different societies have different needs, sensitivities, and attitudes toward moderation. Some countries want more moderation and some want less.

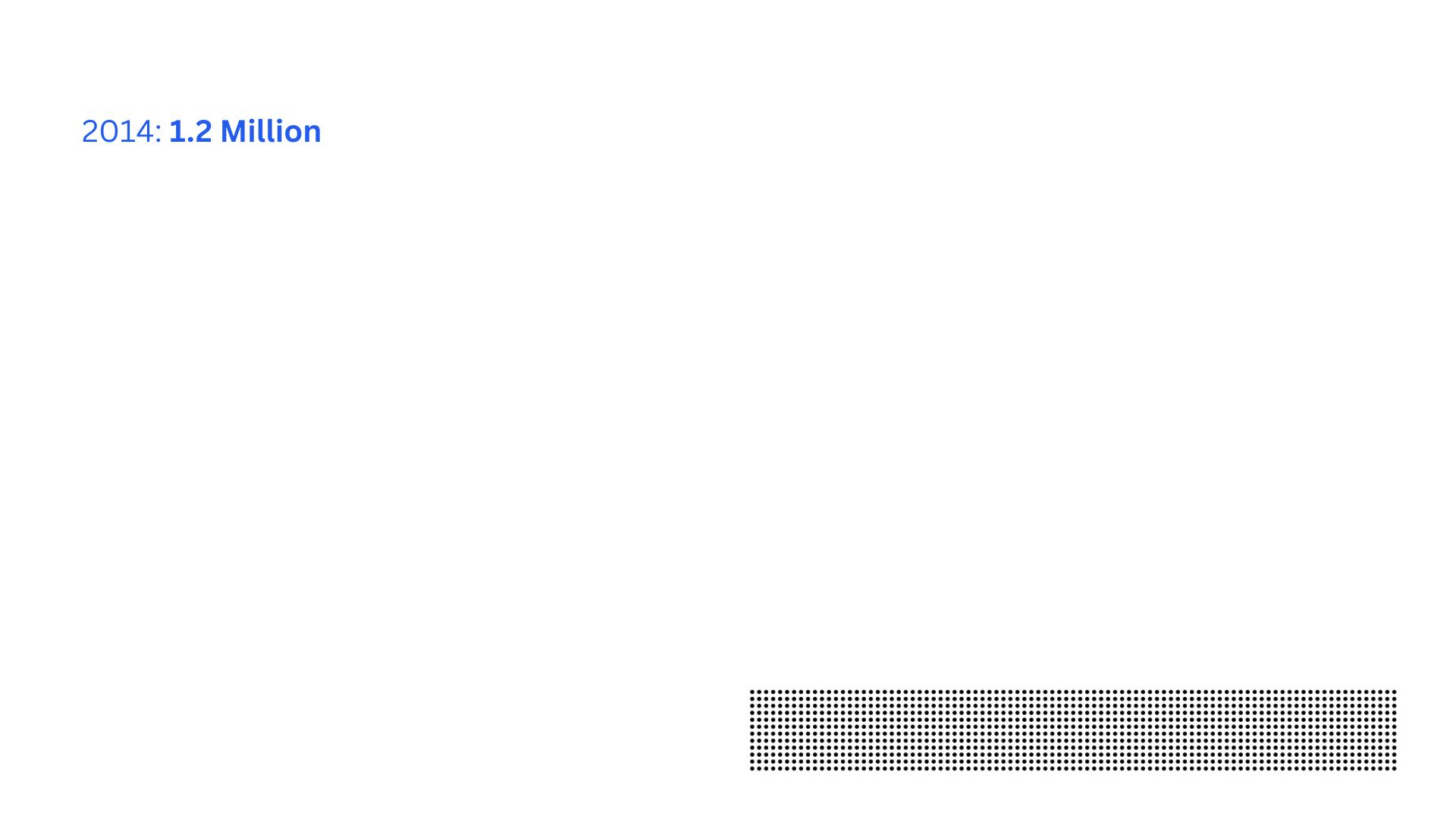

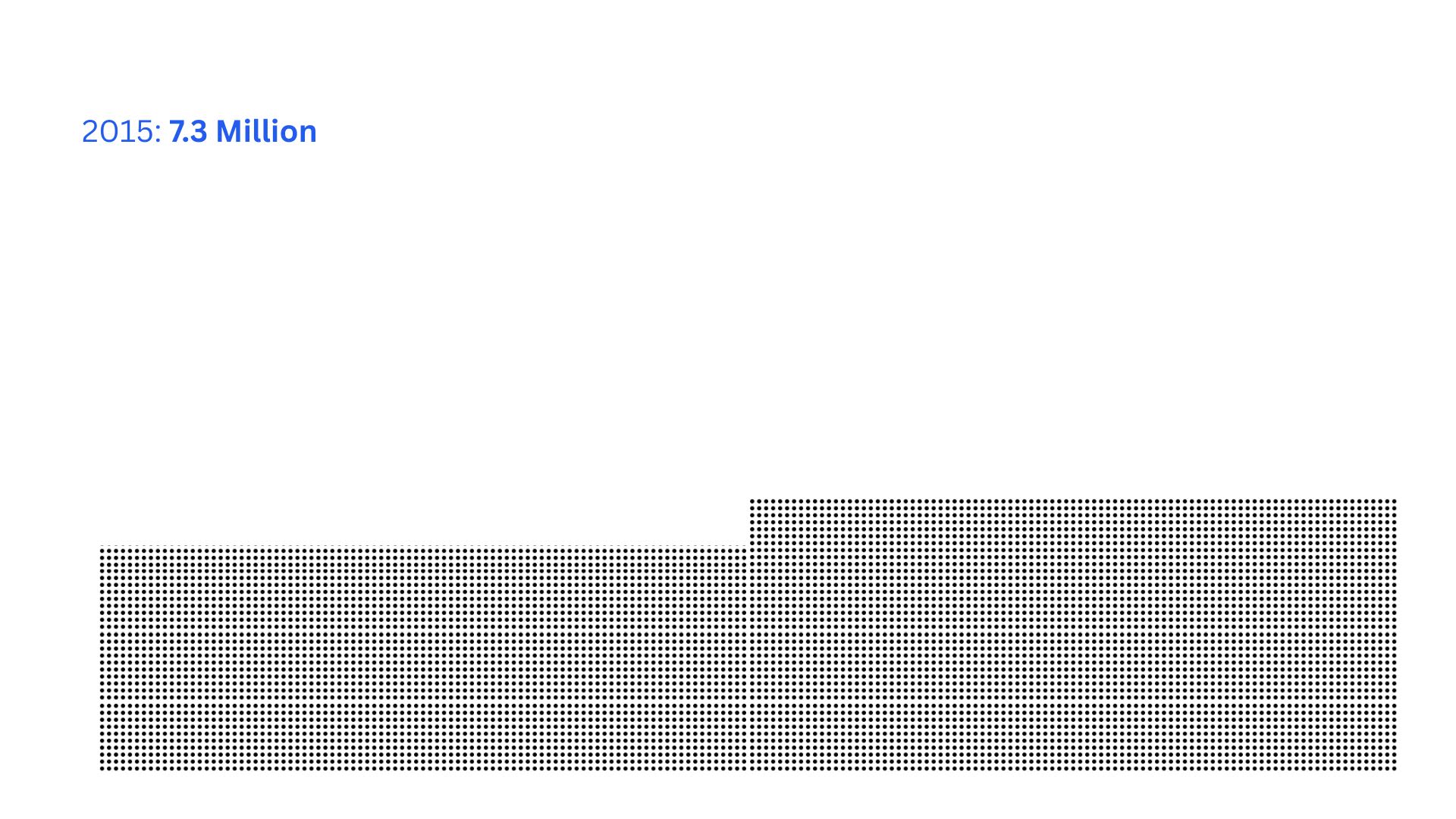

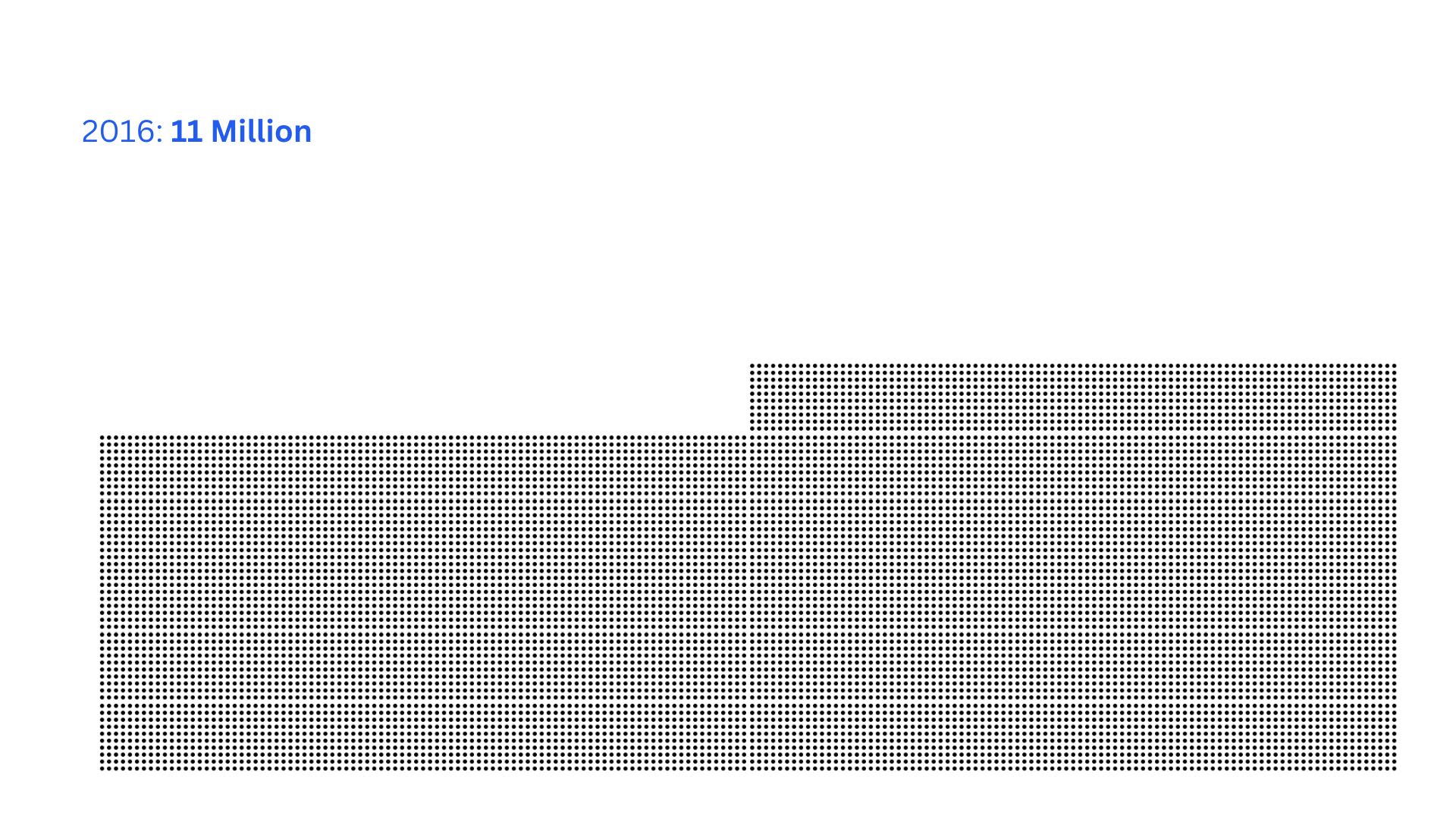

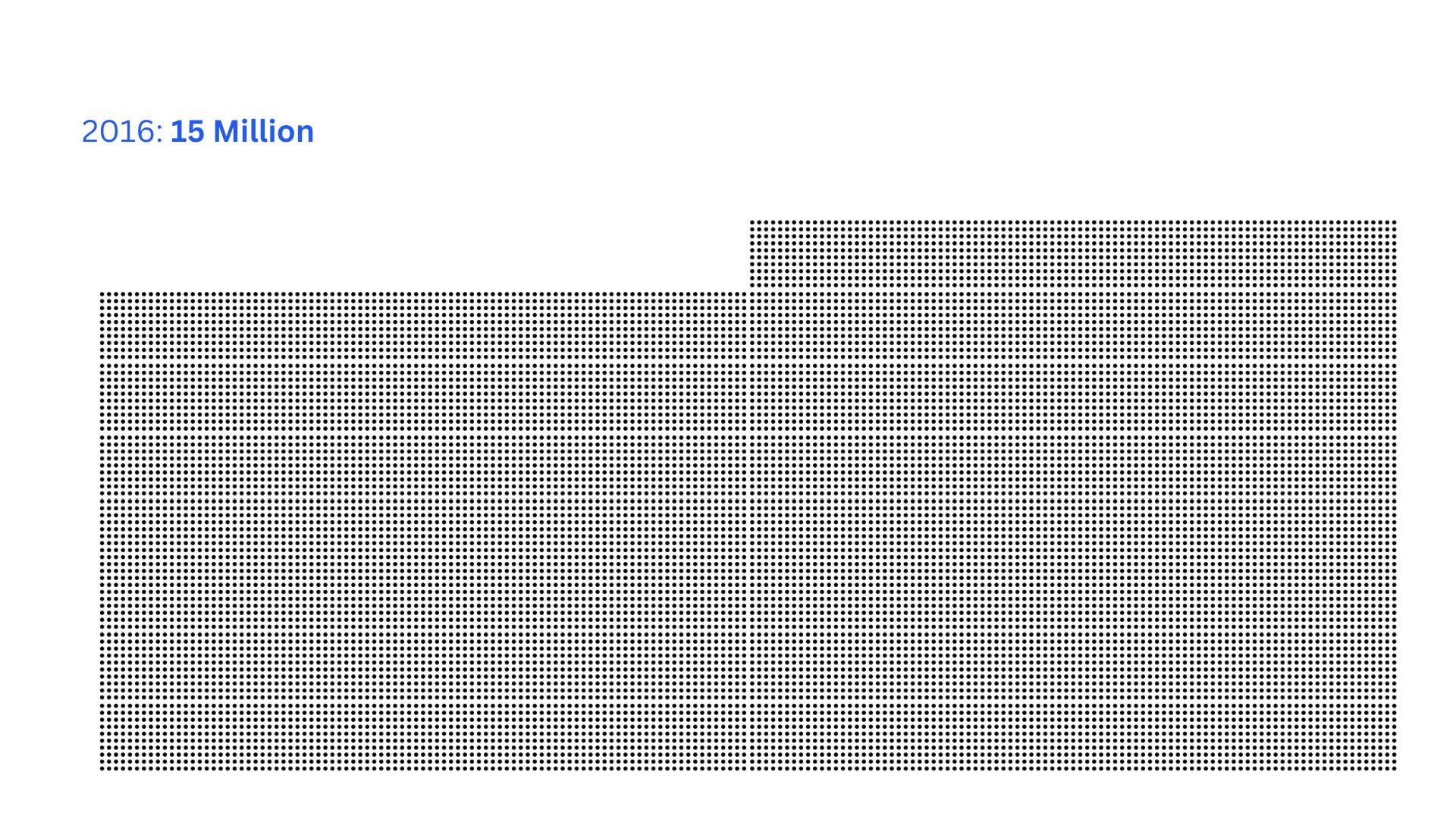

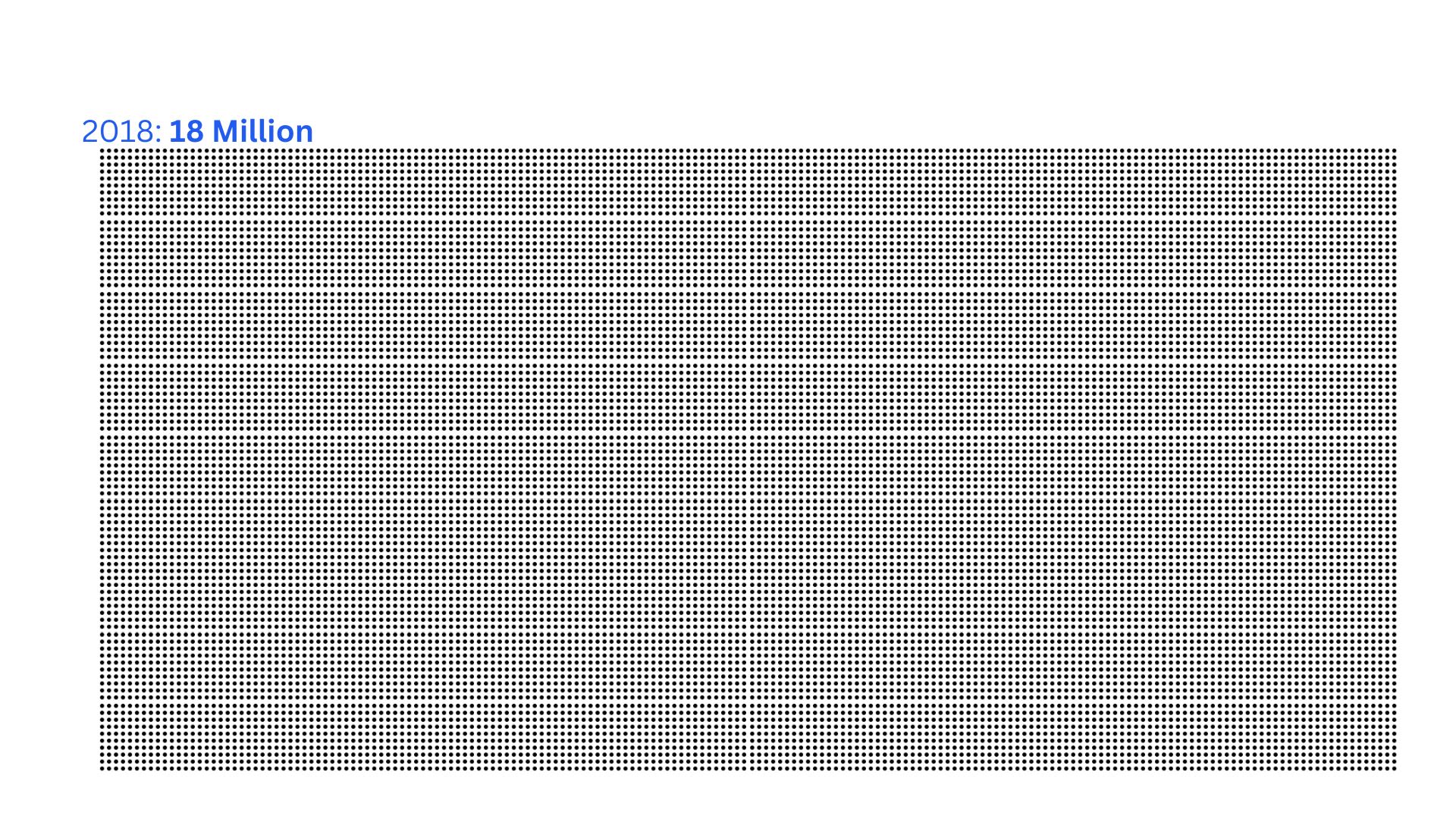

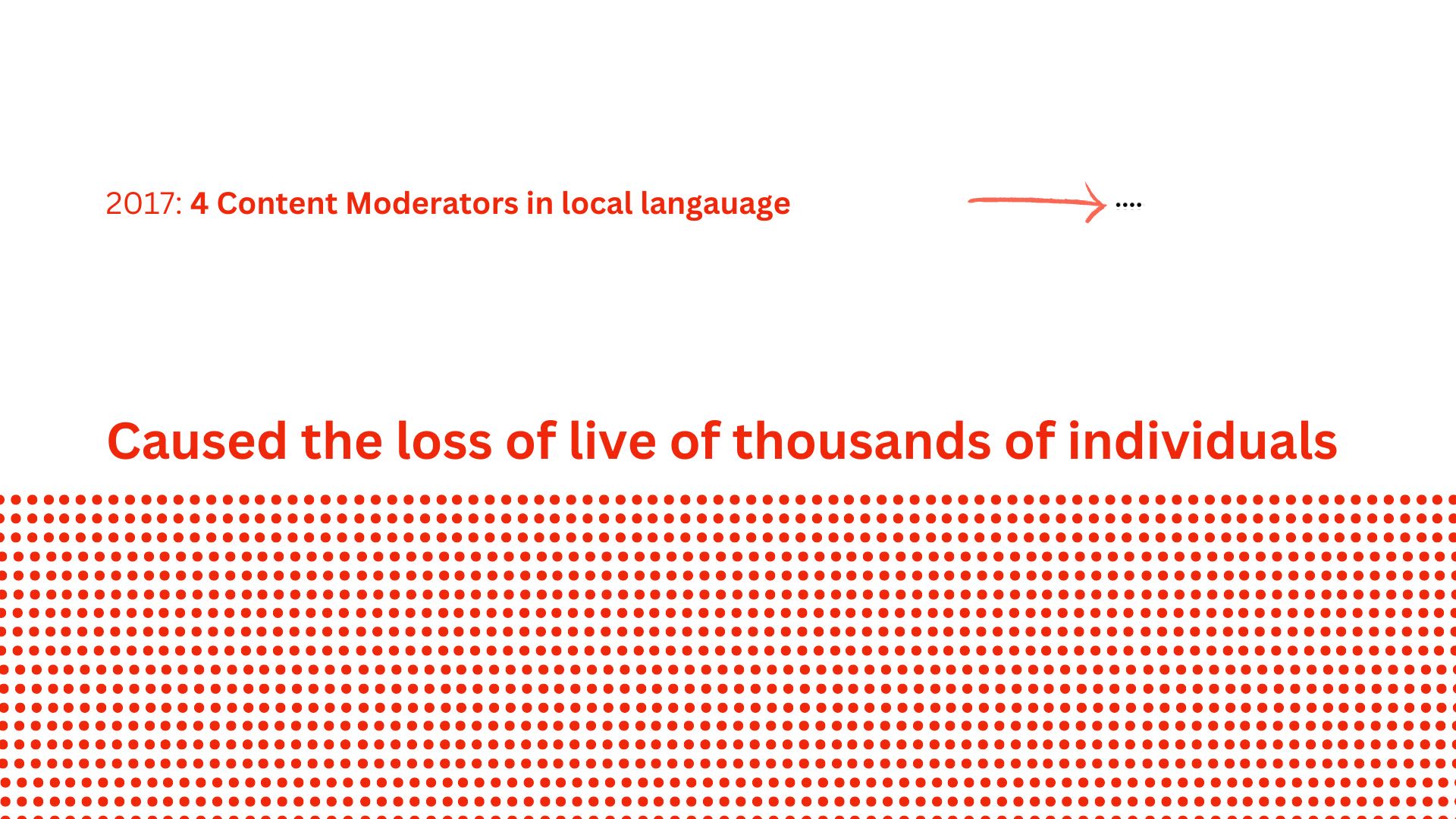

In 2017, Facebook posts were used to incite violence against Muslims in Myanmar. Facebook’s algorithm amplified these posts, and the platform failed to moderate hateful and fake content that spread misinformation about the minority group, leading to national outrage and the death and displacement of thousands of people.

This incident shows how serious the problem of content moderation on social media platforms is.

Algorithms that curate content have changed the way we consume and share media.

Our minds are primed to pick up negative and often more extreme content.

The visual above shows the headlines that were shared from news sources on Facebook. Each headline was tagged by category to indicate whether it related to politics, technology, economy, or Palestine. The data show that political news is shared the most and that, among the sources, Breitbart articles received the most shares.

According to academics and journalists, Breitbart has a reputation for featuring content that is misogynistic, xenophobic, and racist.
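The tally behind a visual like this can be sketched in a few lines of Python. This is a minimal illustration, not the analysis actually used for the visual: the tuple layout (source, category, share count), the `total_shares` helper, and every value in `SAMPLE_POSTS` are assumptions made up for the example and are not taken from the datasets listed below.

```python
from collections import Counter

# Illustrative rows only: (source, category, share count). These values are
# invented for the sketch and do not come from any real dataset.
SAMPLE_POSTS = [
    ("source_a", "politics", 120),
    ("source_b", "technology", 40),
    ("source_a", "politics", 90),
    ("source_c", "economy", 30),
    ("source_b", "politics", 60),
]


def total_shares(posts, key_index):
    """Sum share counts grouped by the field at key_index (0 = source, 1 = category)."""
    totals = Counter()
    for post in posts:
        totals[post[key_index]] += post[2]
    return totals


shares_by_source = total_shares(SAMPLE_POSTS, 0)
shares_by_category = total_shares(SAMPLE_POSTS, 1)

# most_common(1) returns a one-item list holding the (key, total) pair with the largest total.
top_category, top_category_shares = shares_by_category.most_common(1)[0]
top_source, top_source_shares = shares_by_source.most_common(1)[0]
print(top_category, top_category_shares)
print(top_source, top_source_shares)
```

Grouping by category and by source separately keeps the two questions apart: which topic is shared most, and which outlet's headlines travel furthest.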

What should platforms be doing?

Change the Algorithms

Show individuals more diverse content that is not biased. Limit the virality of hateful content.

Researchers from the Difficult Conversations Lab at Columbia University have found that conversations between people with opposing views go better when more layers of a story or opinion are shared.

Provide more Transparency

Do a better job of informing people why posts are removed and what is acceptable.

Provide more data to researchers and provide reports on the effects of misinformation and abuse.

Protect all People Equally

Understand how misinformation and abuse work in specific communities and find localized methods to prevent them.

Learn more about ways you can support the cause.

Sources:

[1] https://www.reuters.com/investigates/special-report/myanmar-facebook-hate/

[4] https://www.pewresearch.org/journalism/2021/09/20/news-consumption-across-social-media-in-2021/

Datasets

https://www.kaggle.com/datasets/rishidamarla/social-media-posts-from-us-politicians

https://www.kaggle.com/datasets/socialmedianews/how-news-appears-on-social-media